April 30, 2025

In the digital world, AI governance is not an obligation, it’s a competitive differentiator

Does your company’s AI governance just focus on compliance & risk reduction?

The scope and speed of new AI developments, including GenAI and Agentic AI, are far outpacing the AI governance tools needed to deploy them safely and securely while still maximizing the business value they return.

With any new disruptive technology like GenAI and Agentic AI, the initial reaction is to focus exclusively on the operational, compliance, and reputational risk elements of adopting and utilizing it. While these are critical governance issues that need to be addressed, they are only half of the overall AI governance equation.

Kurt Muehmel, head of AI strategy at Dataiku, puts it this way: “Governance is a strength that ensures that AI is aligned with business objectives, is produced efficiently, follows internal best practices, is designed for production from beginning to end, and promotes reusing components. AI governance thought of this way becomes not an obligation but a competitive differentiator.”

To be clear, AI governance is the responsibility of the entire organization, from the Board to the C-Suite decision makers to the line-of-business users, not just the IT shop. All of these stakeholders therefore need enough AI governance literacy to see how the company can use it both defensively and offensively.

A strategic AI governance framework can generate business value and drive digital transformation

Finding the right balance between using AI governance defensively and offensively requires thinking about it as a strategic asset that creates business value, not just an operational asset that manages risk and compliance. Both roles are critical and must be carried out with equal focus and commitment. As such, the best approach is both/and, not either/or.

A good place to start is to clearly define and agree upon a companywide AI governance framework that is aligned with the organization’s values and core principles. This framework will establish clear and responsible guidelines that are resilient, ethical, trustworthy, and safe for everyone to use. Here are some use case questions that would go into this framework:

- Can employees use public AI chatbots or only secure, company-approved tools?

- Can business units create and deploy their own AI agents?

- Can sales and marketing use AI-generated images?

- Should humans review all AI output or are reviews only necessary for high-risk use cases?

- Who gets to decide if a particular use case meets the company’s guidelines, and who gets to enforce that decision?
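One way to make questions like these concrete is to write the answers down as a small, reviewable policy that tools and teams can check against. The following is only a minimal sketch under stated assumptions: every name in it (`UsagePolicy`, `UseCase`, `evaluate`, the tool and department labels, and the two-level risk scale) is hypothetical and chosen for illustration, not taken from any particular governance product or standard.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import IntEnum


class Risk(IntEnum):
    # Hypothetical two-level scale; a real framework may define more tiers.
    LOW = 1
    HIGH = 2


@dataclass(frozen=True)
class UsagePolicy:
    """Answers to the framework questions, captured as data (all fields are assumptions)."""
    approved_tools: frozenset[str]                 # which AI tools employees may use
    units_may_deploy_agents: bool                  # may business units deploy their own agents?
    image_generation_allowed_for: frozenset[str]   # departments allowed to use AI images
    review_from_risk: Risk                         # human review required at this level and above
    decision_owner: str                            # who decides if a use case meets the guidelines
    enforcement_owner: str                         # who enforces that decision


@dataclass(frozen=True)
class UseCase:
    department: str
    tool: str
    risk: Risk
    generates_images: bool = False
    deploys_agent: bool = False


@dataclass(frozen=True)
class Decision:
    allowed: bool
    needs_human_review: bool
    reasons: tuple[str, ...]
    escalate_to: str


def evaluate(policy: UsagePolicy, use_case: UseCase) -> Decision:
    """Check one proposed use case against the policy and explain any refusal."""
    reasons = []
    if use_case.tool not in policy.approved_tools:
        reasons.append(f"Tool '{use_case.tool}' is not company-approved.")
    if use_case.deploys_agent and not policy.units_may_deploy_agents:
        reasons.append("Business units may not deploy their own AI agents.")
    if (use_case.generates_images
            and use_case.department not in policy.image_generation_allowed_for):
        reasons.append(f"AI-generated images are not approved for {use_case.department}.")
    return Decision(
        allowed=not reasons,
        needs_human_review=use_case.risk >= policy.review_from_risk,
        reasons=tuple(reasons),
        escalate_to=policy.decision_owner,
    )


# Example policy and request; all values are made up for illustration.
policy = UsagePolicy(
    approved_tools=frozenset({"company-assistant"}),
    units_may_deploy_agents=False,
    image_generation_allowed_for=frozenset({"marketing"}),
    review_from_risk=Risk.HIGH,
    decision_owner="AI governance team",
    enforcement_owner="Department heads",
)
decision = evaluate(policy, UseCase("sales", "public-chatbot", Risk.HIGH, generates_images=True))
print(decision.allowed, decision.needs_human_review)
for reason in decision.reasons:
    print("-", reason)
```

The point of the sketch is less the code than the habit it encourages: once the answers live in one explicit place, they can be debated, versioned, and audited by the whole cross-functional team rather than living in scattered emails.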

79% of C-suite executives surveyed by Deloitte say that AI, GenAI, and Agentic AI will drive substantial transformation within their organization and industry over the next three years. To gain competitive differentiation and increased business value from these transformative outcomes, companies must use AI governance as a strategic asset, not just an obligatory risk and compliance asset.

A good first step is to create a cross-functional AI governance team

The biggest mistake most companies make is to assign AI governance to their IT shop instead of realizing that it is the responsibility of the entire organization. Since AI will impact almost every employee, it is critical to get their input, alignment, and support for how the company plans to adopt and deploy it.

This pertains not only to policy setting but also to the broader transformation in how work gets done. For example, while AI can automate proofreading of sales and marketing documents, it also creates new governance requirements, such as verifying source material and claims and checking compliance with brand and legal standards.

Intuit put together a multidisciplinary team to create its enterprise-wide AI governance policies. “The team includes people with expertise in data privacy, AI, data science, engineering, product management, legal, compliance, security, ethics, and public policy,” according to Liza Levitt, VP and deputy general counsel.

A major role for a team like this is to increase the overall knowledge of AI across the company. This can start with an AI literacy and awareness program that educates the organization on what AI is, what employees must be prepared to do to get real business value from it, and how to guard against data privacy and compliance risks. As I have written about before, one of the biggest barriers to the adoption and utilization of GenAI and Agentic AI is employees’ fear that they will lose their jobs.

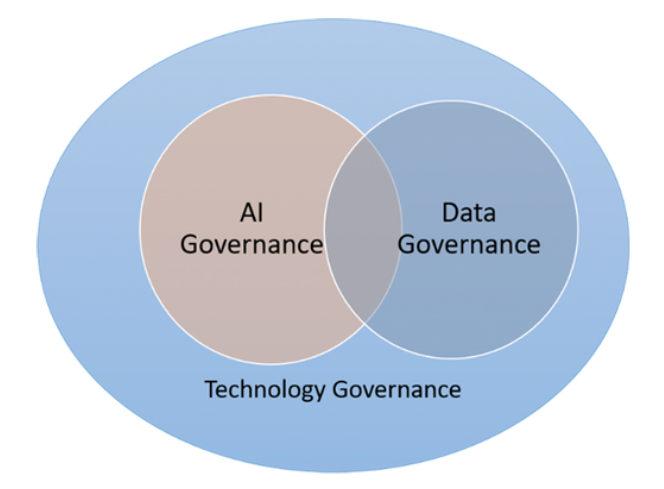

Good AI governance requires good data governance

A recent IDC survey documented that:

- 70% of CIOs reported a 90% failure rate for their custom-built GenAI projects

- 66% reported a 90% failure rate with vendor-led proofs of concept

Here are some of the major sources of the 90% failure rate:

- Lack of sufficient quantity of data – AI needs a large amount of good, easily accessible data to train on

- Lack of sufficient quality of data – “garbage in, garbage out”

- Underestimating the time and cost of aggregating data from multiple different sources around the company (sales, marketing, legal, finance, IT, HR, procurement)

- Absence of an enterprise data governance policy

A great deal of the data that AI learns from comes from outside your organization: industry trends, new competitive products and services, and many other factors that affect your company’s performance and value-creation potential. By contrast, most of your company’s own data resides within its security perimeter, so no AI model has been trained on that unique dataset and it has not yet been mined for its insights.

To get the most competitive advantage out of the successful deployment of AI, you must be able to curate all the different types and sources of data into one metadata resource. This resource must be easily accessible to your different AI tools while still having guardrails to make sure it is used safely and does not violate privacy or ethical policies.

As one CIO said to me, “AI may be the engine, but data is the oil.” Treating data as a revenue-generating asset and ensuring it is seamlessly accessible and usable opens up multiple ways to create significant new business value. For example, you can:

- Build customer trust and increase engagement through personalization engines and ethical AI

- Improve services by using predictive AI to anticipate customer needs and reduce churn

- Accelerate product development with AI-powered market insights

- Help businesses stay ahead with cross-industry collaboration & strategic data sharing

Implementing an AI governance framework

Gartner surveyed IT and Data Analytics leaders and found that only 46% had implemented an AI governance framework, while 55% said their organization had not yet done so.

As you embark on implementing your AI governance framework, it is good to remember that governance is about action and accountability. Its activities cover a diverse set of functions including planning, process, approvals, monitoring, remediation, and auditing. It needs to be embedded in every AI project.

Here are some basic principles to follow in developing and implementing an AI governance framework and set of policies:

- Clear accountability through oversight mechanisms and responsible usage policies

- Full transparency & explainability by making AI systems understandable, providing explanations of how they arrive at their decisions, and promoting trust in their outputs

- Fairness and ethics by implementing measures to identify and mitigate bias in AI data, algorithms, and models to ensure fair and equitable outcomes

- Risk management & compliance oversight by identifying and assessing potential risks associated with AI systems and tools; implementing risk mitigation programs; continuously monitoring and evaluating AI systems to ensure they are performing as intended; and ensuring AI systems comply with relevant legal and regulatory requirements
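To illustrate the risk management principle, here is a minimal sketch of a risk register that scores each identified risk and flags the ones that still lack a mitigation or an accountable owner. The likelihood-times-impact scoring, the 1 to 5 scales, the threshold of 12, and all names and sample entries are assumptions made for illustration; they are not prescribed by any framework cited in this article.

```python
from dataclasses import dataclass


@dataclass
class AIRisk:
    system: str
    description: str
    likelihood: int       # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int           # assumed scale: 1 (minor) to 5 (severe)
    mitigation: str = ""  # empty means no mitigation program yet
    owner: str = ""       # empty means nobody is accountable yet

    @property
    def score(self) -> int:
        # Simple likelihood x impact score, a common risk-matrix convention.
        return self.likelihood * self.impact


def needs_attention(risks, threshold=12):
    """Return high-scoring risks that lack a mitigation or an owner, highest score first."""
    flagged = [
        r for r in risks
        if r.score >= threshold and not (r.mitigation and r.owner)
    ]
    return sorted(flagged, key=lambda r: r.score, reverse=True)


# Hypothetical register entries for illustration only.
register = [
    AIRisk("support-chatbot", "Leaks customer data in responses", 3, 5),
    AIRisk("resume-screener", "Biased ranking of candidates", 4, 4,
           mitigation="Quarterly bias audit", owner="HR analytics"),
    AIRisk("marketing-copy", "Off-brand tone", 3, 2),
    AIRisk("pricing-agent", "Acts outside approved limits", 4, 5,
           mitigation="Hard price caps"),
]

for risk in needs_attention(register):
    print(f"{risk.system}: score {risk.score}, owner={risk.owner or 'UNASSIGNED'}")
```

Reviewing a list like this on a regular schedule is one simple way to turn "continuous monitoring" and "clear accountability" from principles into a recurring agenda item.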

When developed and deployed thoughtfully, AI governance can be a true competitive differentiator, not just a check-the-box obligation.
